Cloud vs. On-Prem AI

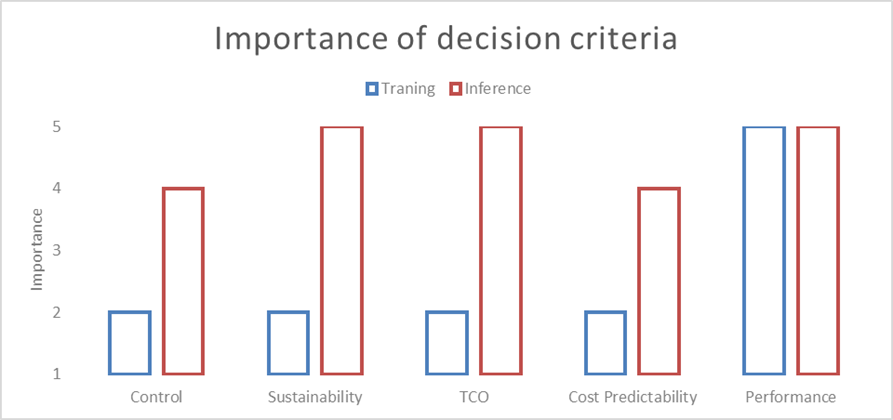

The graph shows how the importance of each decision criterion changes as a customer moves through their AI journey, from the training stage to the inference stage.

Cloud solutions are ideal for starting your AI journey and experimenting, provided that sharing data with a cloud provider is compatible with your compliance requirements. However, your needs change as your AI workloads transition into production (inference). At this stage, you require a scalable solution that delivers high performance, is cost-effective, ensures predictable expenses, and remains sustainable. We often see significant benefits in owning an AI stack, whether it runs on-premises or is hosted in an energy-efficient data centre in the Benelux or the Nordics, and whether it is managed by your own team or fully backed by MDCS.AI.

MDCS.AI offers objective insights to help you compare cloud-based and on-premises AI solutions, focusing on performance, cost predictability, sustainability, and scalability. While we acknowledge the value of cloud solutions, we’ve found that owning an AI stack provides greater control, efficiency, and scalability. Our analysis streamlines decision-making and helps you select the best infrastructure for your unique needs.

Control

During the training phase, the need for control over the infrastructure is generally less critical, as the focus is often on experimentation and flexibility, which makes cloud solutions appealing. However, as AI workloads move into the inference stage, control becomes much more important. This is because owning the infrastructure allows for tighter integration, better data management, and enhanced security.

Sustainability

Sustainability is typically a lower priority during the initial training phase, when the focus is on developing models and testing hypotheses. However, as models transition into production (inference), energy consumption and environmental impact become significant factors. This shift is particularly relevant for organisations aiming to reduce their carbon footprint, making a sustainable on-premises or hosted solution more appealing.

TCO (Total Cost of Ownership)

During training, TCO is not always a primary concern because the focus is on short-term experimentation, and cloud costs tend to be manageable at that scale. However, as inference workloads scale, TCO becomes crucial. Owning the AI stack can provide significant cost savings over time, especially as the demand for compute grows and effective cost management becomes a necessity.
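As a rough illustration of this reasoning, the minimal Python sketch below estimates the month at which the cumulative cost of an owned stack drops below the cumulative cost of a cloud deployment. It assumes a deliberately simple model: a flat monthly cloud bill, a one-off upfront investment, and a flat monthly operating cost for the owned stack. The function names and all figures are hypothetical placeholders, not MDCS.AI pricing or measured data.

```python
import math
from typing import Optional


def cumulative_cloud_cost(monthly_cost: float, months: int) -> float:
    """Total cloud spend after `months`, assuming a flat monthly bill."""
    return monthly_cost * months


def cumulative_owned_cost(upfront: float, monthly_opex: float, months: int) -> float:
    """Total owned-stack spend: one-off investment plus flat monthly operating cost."""
    return upfront + monthly_opex * months


def break_even_month(
    cloud_monthly: float, upfront: float, owned_monthly_opex: float
) -> Optional[int]:
    """First whole month in which owning is no more expensive than cloud.

    Returns None when the owned stack never catches up, i.e. its monthly
    operating cost is not lower than the monthly cloud bill.
    """
    monthly_saving = cloud_monthly - owned_monthly_opex
    if monthly_saving <= 0:
        return None
    return math.ceil(upfront / monthly_saving)


# Hypothetical figures, chosen only to show the arithmetic.
example = break_even_month(cloud_monthly=10_000, upfront=120_000, owned_monthly_opex=4_000)
print(example)  # 20
```

With these placeholder numbers, both options have cost the same after 20 months, and the owned stack is cheaper from then on. A real comparison would also need to account for factors such as hardware depreciation, energy prices, staffing, and changing utilisation.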

Cost Predictability

Performance

Performance is a key concern throughout both the training and inference phases. However, as the demand for compute increases during inference, ensuring high performance becomes even more critical. This need for high compute power and real-time processing often drives organisations to consider an on-premises AI stack that can be optimised for maximum efficiency.

Transition

The transition from training to inference often represents a tipping point where the emphasis shifts from flexibility and initial experimentation to control, cost management, and performance. MDCS.AI helps organisations navigate these changes, providing solutions that match the specific needs of their AI journey.